03.01.2020

Written by Dr. Janak Gunatilleke, Mirantha Jayathilaka and CD Athuraliya

During the holiday season, we had the chance to reflect on NHSX’s report Artificial Intelligence: How to get it right. We would like to focus on two key themes that emerged which have a non-technical focus: openness and listening to users.

At Mindwave, we firmly believe that focus on, and investment in, openness and listening will enable faster and more widespread adoption of AI in the NHS. In this post we argue for three key enablers: user-centred design; open technical standards and data formats, which enable data portability and interoperability; and explainability of how an algorithm makes decisions, including what each stakeholder group would like to see in terms of ‘rationale’ and ‘information’.

The NHSX report

This comprehensive report looks at the current state of play of AI technologies within the NHS and considers what further work, including policy work, needs to be done to scale up the impact and benefits in a safe, ethical and effective manner. It expands on the underlying principles for developing the NHS AI lab, including creating a safe environment for testing AI technologies and inspecting algorithms already in use by the NHS.

The report considers challenges in doing so and suggests mitigating actions in five key areas — leadership & society; skills & talent; access to data; supporting adoption; and international engagement.

Trust and technology adoption in healthcare

Technology adoption in healthcare has always depended on more than the technology or the solution itself; it also depends on how stakeholders and users perceive its effect on them, whether it helps them, hinders them, or both.

AI is no different. For example, do healthcare professionals think it helps or hinders them? Do they trust it? Do patients think it treats them fairly?

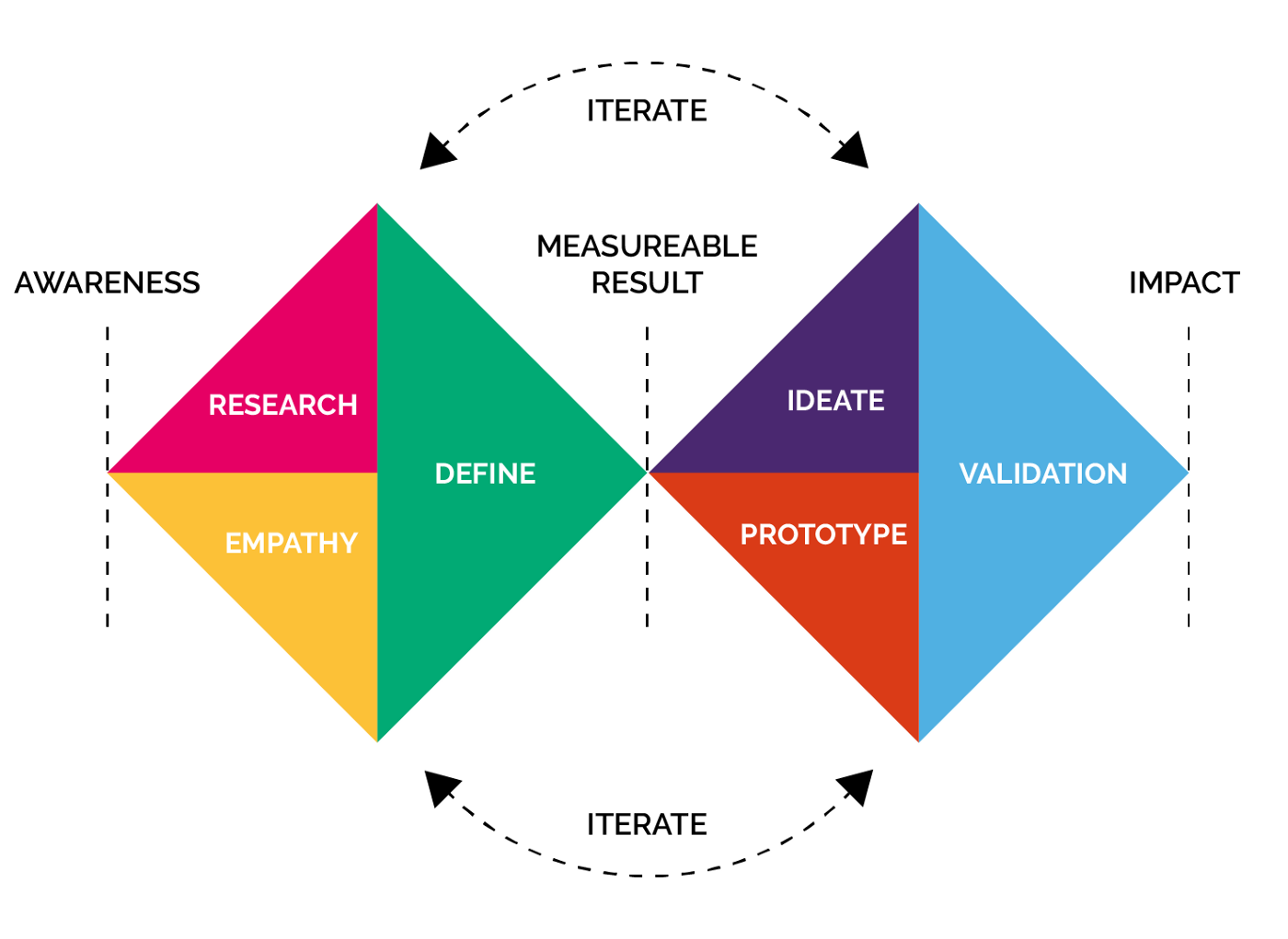

Figure 1: Trust is key to healthcare technology adoption

At Mindwave, we believe trust is a key factor in the successful adoption of AI-based solutions in the NHS and in the wider healthcare sphere. High profile failures, such as the closure of the care.data programme, highlight the critical importance of trust [1].

Drawing on our experience of the NHS frontline, the global health tech industry, and AI technology and research roles, we wanted to examine the two of the report's five key areas that pertain most directly to trust, and share our thoughts. These two areas are access to data and supporting adoption.

This also directly relates to many of the behaviours set out in the Code of conduct for Data-Driven Health and Care Technology (also referred to within the NHSX report). The most relevant of these are listed below:

Key area 1 from the report | Access to data

The first focus area is access to data, which the NHSX report describes as facilitating legal, fair, ethical and safe data sharing that is scalable and portable, in order to stimulate AI technology innovation.

Personal Health Record (PHR) solutions help users access and build up their health and wellbeing data. We have highlighted in a recent article how we think PHRs can help maximise the value of health data. Key points include:

NHSX has already laid the foundations for promoting open data and technical standards, including Fast Healthcare Interoperability Resources (FHIR). Semantic web technologies such as the Resource Description Framework (RDF) offer an interesting angle on how common vocabularies and data models can be developed to enable more efficient data sharing and linking [2]. Furthermore, repositories such as BioPortal collect biomedical ontologies, with the aim of making knowledge and data interoperable to further biomedical science and clinical care. It is important that we move away from proprietary data storage formats and adopt standardised structures. For example, data archetypes [3], which specify the various data elements that need to be captured, can provide consistency and improve the ability to share data across systems.
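
To make the idea of a standardised structure more concrete, here is a minimal Python sketch that maps two hypothetical proprietary records onto one FHIR-style Observation. The field names `pt`, `hr_bpm`, `patient_id` and `pulse`, and both "systems", are invented for illustration. The output follows the general shape of a FHIR R4 Observation (`resourceType`, `status`, `code`, `subject`, `valueQuantity`) using LOINC code 8867-4 for heart rate, but it is a simplified sketch rather than a validated, profile-conformant FHIR implementation.

```python
import json

# LOINC coding for heart rate, as used in FHIR vital-signs examples.
HEART_RATE_CODING = {
    "system": "http://loinc.org",
    "code": "8867-4",
    "display": "Heart rate",
}


def to_fhir_heart_rate(patient_id: str, bpm: float) -> dict:
    """Build a simplified FHIR R4-style Observation for a heart-rate reading."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {"coding": [HEART_RATE_CODING]},
        "subject": {"reference": f"Patient/{patient_id}"},
        "valueQuantity": {
            "value": bpm,
            "unit": "beats/minute",
            "system": "http://unitsofmeasure.org",
            "code": "/min",
        },
    }


def from_system_a(record: dict) -> dict:
    """Hypothetical 'system A': numeric heart rate under its own key names."""
    return to_fhir_heart_rate(record["pt"], float(record["hr_bpm"]))


def from_system_b(record: dict) -> dict:
    """Hypothetical 'system B': heart rate stored as text with a unit suffix."""
    value = record["pulse"].split()[0]  # e.g. "72 bpm" -> "72"
    return to_fhir_heart_rate(record["patient_id"], float(value))


a = from_system_a({"pt": "123", "hr_bpm": 72})
b = from_system_b({"patient_id": "123", "pulse": "72 bpm"})

# Once both sources share one structure, their data can be compared and linked directly.
print(a == b)  # True
print(json.dumps(a, indent=2))
```

The design point is that each source system needs only one adapter into the shared structure, rather than a bespoke translation for every other system it exchanges data with.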

A recent article by Panch et al. neatly summarises some of the key issues we currently face [4]. First is the limited availability of data to validate and generalise algorithms that have been trained on narrow datasets (for example, datasets drawn mainly from Caucasian patients). Second is the variable quality of data within Electronic Health Records (the most valuable data store in healthcare), and the challenges that the lack of interoperability presents for linking and transferring data.

Key area 2 from the report | Supporting adoption

The second focus area is supporting adoption, which the report further describes as driving public and private sector adoption of AI technologies that are good for society. This is closely linked to ‘algorithmic explainability’ (principle 7) in the Code of Conduct mentioned above.

We believe supporting this adoption requires two key sub-elements:

(a) User insights

The first is user insights.

Of the processes highlighted in the report, a few points strike us as being very important: identifying what counts as a meaningful explanation for each stakeholder group, developing meaningful language, engaging through appropriate activities (for example, talking to users and citizen juries) and monitoring user reactions (for example, through user testing).

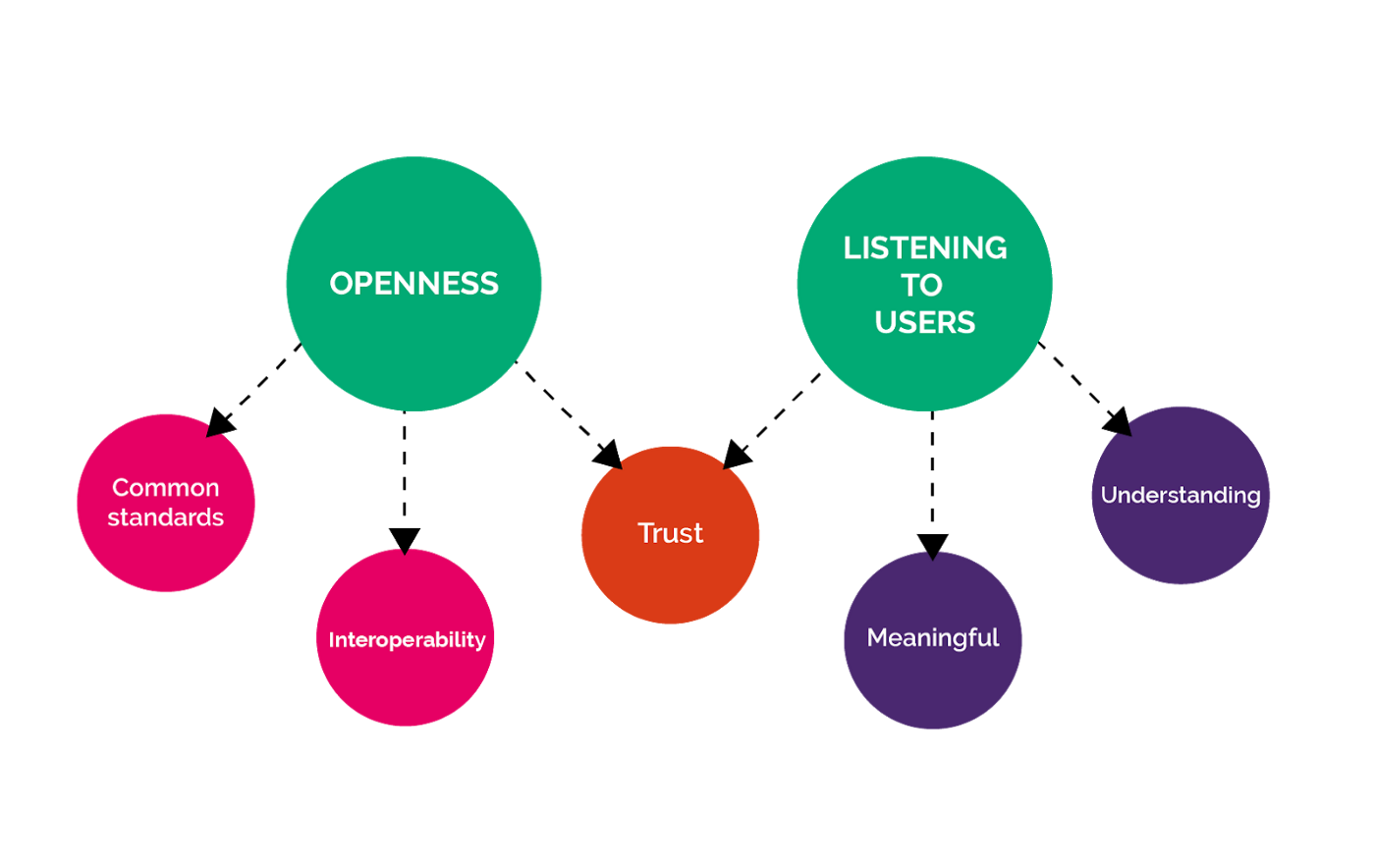

Design can be compared to an algorithm, for example how you do something or what ‘lies underneath’. Using an iterative, human-centred approach to designing and developing AI based solutions can help gain valuable insights. For example, at Mindwave we follow the double diamond approach from the Design Council.

Figure 2: Iterative human-centered design

Integrating the resulting tools into practice also requires thoughtful design, both to ensure diagnostic accuracy and to minimise any adverse impact on workflow efficiency [5]. As mentioned before, key questions could include ‘would healthcare professionals view the tools as a threat?’ and ‘how disruptive are the tools to their day-to-day work?’.

(b) Explainability

The second is explainability.

Algorithmic bias can be a significant issue in healthcare AI solutions, as evidenced by a recent study published in Science [6]. The study reviewed an algorithm widely used within the US health system, which uses health costs as a proxy for need. Because less is spent on black patients than on white patients with the same level of need, the algorithm identified less than 40% of the black patients who needed care.
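
The mechanism behind this kind of proxy bias can be shown with a deliberately tiny toy example. The Python sketch below uses invented, synthetic patients: two groups with identical need, where (by assumption) £55 is spent per unit of need in group B compared with £100 in group A. The group labels, spend figures and number of programme places are all hypothetical. It does not reproduce the study's data or method; it only shows how ranking on cost rather than need can under-select the lower-spend group.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    need: int  # true health need on a hypothetical 1-10 scale
    cost: int  # observed annual spend in pounds, used as the proxy label


# Hypothetical spend per unit of need: group B receives less care for the same need.
SPEND_PER_NEED = {"A": 100, "B": 55}

# Both groups contain one patient at every need level from 1 to 10.
patients = [
    Patient(group, need, need * SPEND_PER_NEED[group])
    for group in ("A", "B")
    for need in range(1, 11)
]


def select_top(patients, key, k):
    """Select the k patients ranked highest by the given key."""
    return sorted(patients, key=key, reverse=True)[:k]


def count_group(selected, group):
    """Count how many selected patients belong to the given group."""
    return sum(1 for p in selected if p.group == group)


K = 6  # hypothetical number of places on a care-management programme
by_need = select_top(patients, key=lambda p: p.need, k=K)
by_cost = select_top(patients, key=lambda p: p.cost, k=K)

print("Group B selected when ranking by need:", count_group(by_need, "B"))  # 3
print("Group B selected when ranking by cost:", count_group(by_cost, "B"))  # 1
```

Both groups have exactly the same need, yet ranking by cost selects one group B patient instead of three, because the proxy encodes the spending gap rather than need itself.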

What we believe is missing from the NHSX report is an emphasis on understanding how an algorithm reached the decision it did. This understanding is becoming increasingly important. For example, a recent US study used AI to review 1.77 million electrocardiogram (ECG) results from nearly 400,000 people in order to identify patients who may be at increased risk of dying [7]. Although the algorithm performed better than doctors, the study did not reveal which patterns it based its predictions on; knowing this would allow the insight to be incorporated into clinical practice to improve predictions.

Figure 3: Explainability promotes acceptance and identification of further solutions

Image by LinkedIn Sales Navigator from Pexels

There are now cross-industry initiatives, such as the Partnership on AI, which seek to promote the transparency of machine learning models. Examples highlighted within the initiative include Google’s Model Cards and the Microsoft Face API. These are welcome; however, they do not address the ‘custom’ models and algorithms used within various solutions, including those developed by health tech start-ups.

IBM Research’s take on explainability is quite clear [8]:

“When it comes to understanding and explaining the inner workings of an algorithm, different stakeholders require explanations for different purposes and objectives. Explanations must be tailored to their needs. A physician might respond best to seeing examples of patient data similar to their patient’s. On the other hand, a developer training a neural net will benefit from seeing how information flows through the algorithm. While a regulator will aim to understand the system as a whole and probe into its logic, consumers affected by a specific decision will be interested only in factors impacting their case.”

Currently, simple visualisation techniques such as the What-If Tool, developed by Google’s People + AI Research (PAIR) initiative, provide an easy-to-use visual interface, requiring minimal coding, for understanding more about the machine learning model being used [9]. For example, it lets users compare two different models, understand how much various ‘attributes’ of the dataset affect the output, and see how the output changes when a datapoint is modified or deleted from the dataset. Other work shows how visually combining different interpretability elements, such as what features an algorithm is looking for (feature visualisation) and how important they are to the output (attribution), can provide powerful insights into how the algorithm distinguishes between possibilities [10].
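
The ‘what-if’ and attribution ideas above can be illustrated without any particular tool. The Python sketch below uses a hypothetical linear risk score with hand-picked weights (`WEIGHTS`, `BIAS`, the feature names and both patients are invented; this is not a clinical model and not the What-If Tool's API). `what_if` changes one attribute and reports how the output moves, while `attributions` splits a prediction into per-feature contributions relative to a baseline patient. For a linear model these contributions add up exactly to the difference between the two predictions; non-linear models need more elaborate attribution methods.

```python
# Hypothetical linear risk model: weights are invented for illustration only.
WEIGHTS = {"age": 0.03, "systolic_bp": 0.02, "smoker": 0.8}
BIAS = -5.0


def predict(patient: dict) -> float:
    """Linear risk score: bias plus the weighted sum of the patient's features."""
    return BIAS + sum(WEIGHTS[f] * patient[f] for f in WEIGHTS)


def what_if(patient: dict, feature: str, new_value: float) -> float:
    """Return the change in score when a single feature is set to a new value."""
    modified = {**patient, feature: new_value}  # copy, leaving the original untouched
    return predict(modified) - predict(patient)


def attributions(patient: dict, baseline: dict) -> dict:
    """Per-feature contribution relative to a baseline patient.

    For a linear model, weight * (value - baseline value) is exact: the
    contributions sum to predict(patient) - predict(baseline).
    """
    return {f: WEIGHTS[f] * (patient[f] - baseline[f]) for f in WEIGHTS}


patient = {"age": 70, "systolic_bp": 160, "smoker": 1}
baseline = {"age": 50, "systolic_bp": 120, "smoker": 0}  # hypothetical reference patient

print("Score:", round(predict(patient), 2))
print("What if they stopped smoking?", round(what_if(patient, "smoker", 0), 2))
for feature, contribution in attributions(patient, baseline).items():
    print(f"{feature}: {contribution:+.2f}")
```

With these invented numbers the patient's score is 1.1; the what-if query shows that setting `smoker` to 0 would lower it by 0.8, and the three attributions (+0.60, +0.80, +0.80) add up to the 2.2 gap between this patient and the baseline.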

Current research suggests that a promising path to improving explainability in ‘black-box’ AI algorithms is to integrate explicit structured knowledge with data-driven, learning-based algorithms. This can also be seen as aligning the algorithms so that they base their predictions on the underlying theories and rules laid down by their designers. The algorithms’ decisions can then be explained using the knowledge they were explicitly taught.
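
As a very simplified illustration of combining explicit knowledge with a learned model, the Python sketch below wraps a hypothetical data-driven score (`learned_score`, a stand-in for any trained model) with designer-specified rules, and records every rule that fires. The final decision can then be explained in terms of the knowledge the system was explicitly given. Research approaches that build knowledge into the learning itself are considerably more sophisticated; this sketch only shows the explanatory benefit of keeping the rules explicit. The features, rule, threshold and scoring formula are all invented and are not clinical guidance.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Rule:
    """An explicit, designer-specified piece of knowledge."""
    description: str
    applies: Callable[[dict], bool]


@dataclass
class Decision:
    refer: bool
    score: float
    reasons: List[str] = field(default_factory=list)


def learned_score(patient: dict) -> float:
    """Hypothetical stand-in for a trained model's probability output."""
    return min(1.0, 0.01 * patient["age"] + 0.2 * patient["abnormal_tests"])


# Hypothetical rule; not clinical guidance.
RULES = [
    Rule("Chest pain with an abnormal ECG always warrants referral",
         lambda p: p["chest_pain"] and p["abnormal_ecg"]),
]

THRESHOLD = 0.7  # hypothetical decision threshold for the learned score


def decide(patient: dict) -> Decision:
    """Combine the learned score with explicit rules, recording the rationale."""
    score = learned_score(patient)
    decision = Decision(refer=score >= THRESHOLD, score=score)
    decision.reasons.append(f"Model score {score:.2f} vs threshold {THRESHOLD}")
    for rule in RULES:
        if rule.applies(patient):
            decision.refer = True  # explicit knowledge overrides the learned score
            decision.reasons.append(f"Rule: {rule.description}")
    return decision


d = decide({"age": 40, "abnormal_tests": 1, "chest_pain": True, "abnormal_ecg": True})
print("Refer:", d.refer)
for reason in d.reasons:
    print("-", reason)
```

Here the learned score alone (0.60) falls below the threshold, but the explicit rule triggers a referral, and the recorded reasons state exactly which piece of designer knowledge changed the outcome.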

Another area of interest in explainability research is the study of disentangled representations. This area looks at breaking down the learned representation space into distinct features, in turn giving the user of the algorithm an idea of which specific features the algorithm has identified in making a decision.

In summary, all of the above points can be grouped under two themes: openness and listening to users.

Figure 4: Openness and listening to users — impact on AI adoption in the NHS

The future

At Mindwave, we believe that focus and investment in the above two themes will enable faster and more widespread adoption of AI in the NHS.

We recommend the following:

If you have any feedback or would like to chat about any of the above points, please drop Janak an email via janak@mindwaveventures.com

References

[1] https://www.wired.co.uk/article/care-data-nhs-england-closed

[2] https://yosemiteproject.org/webinars/why-rdf.pdf

[4] Panch, T., Mattie, H. and Celi, L.A., 2019. The “inconvenient truth” about AI in healthcare. npj Digital Medicine, 2(1), pp.1–3.

[5] https://ai.googleblog.com/2019/12/lessons-learned-from-developing-ml-for.htm

[6] Obermeyer, Z., Powers, B., Vogeli, C. and Mullainathan, S., 2019. Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), pp.447–453.

[8] https://www.research.ibm.com/artificial-intelligence/trusted-ai/